Download ML thing.

make new venv.

pip install -r requirements.txt.

pip can’t find the right versions.

pip install --upgrade pip.

pip still can’t find the right versions.

install conda.

conda breaks for some reason.

fix conda.

install with conda.

pytorch won’t compile with CUDA support.

install 2,000,000GB of nvidia crap from conda.

pytorch still won’t compile.

install older version of gcc with conda.

pytorch still won’t compile.

reinstall the entire operating system with debian 11.

apt can’t find shitlib-1.

install shitlib-2.

it’s not compatible with shitlib-1.

compile it from source.

automake breaks.

install debian 10.

It actually works.

“Join our discord to get the model”.

give up.

It feels like you stood behind me yesterday, taking notes.

This comment gives me ptsd

That’s when you do `/usr/bin/python3.11 -m pip install`

mother. fucking. hardcoded paths. 1 step forward, 10 steps backward.
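For what it’s worth, the `-m pip` trick doesn’t strictly need a hardcoded path. A rough sketch of the same idea from inside Python, using `sys.executable` so pip is always the one belonging to the interpreter you are actually running. `pip_command` and `in_virtualenv` are made-up helper names for illustration, not part of pip or the standard library.

```python
import subprocess
import sys


def pip_command(*args):
    """Build a pip invocation bound to the interpreter running this script."""
    # sys.executable is the absolute path of the current interpreter, so
    # "-m pip" targets that interpreter's environment instead of whatever
    # "pip" happens to come first on PATH.
    return [sys.executable, "-m", "pip", *args]


def in_virtualenv():
    """Return True when running inside a venv (prefix differs from base)."""
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("interpreter:", sys.executable)
    print("inside a venv:", in_virtualenv())
    # Ask this interpreter's pip to identify itself. check=False because
    # pip may not be installed for every interpreter.
    subprocess.run(pip_command("--version"), check=False)
```

Running it with each `python` on your machine is a quick way to see which pip goes with which interpreter.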

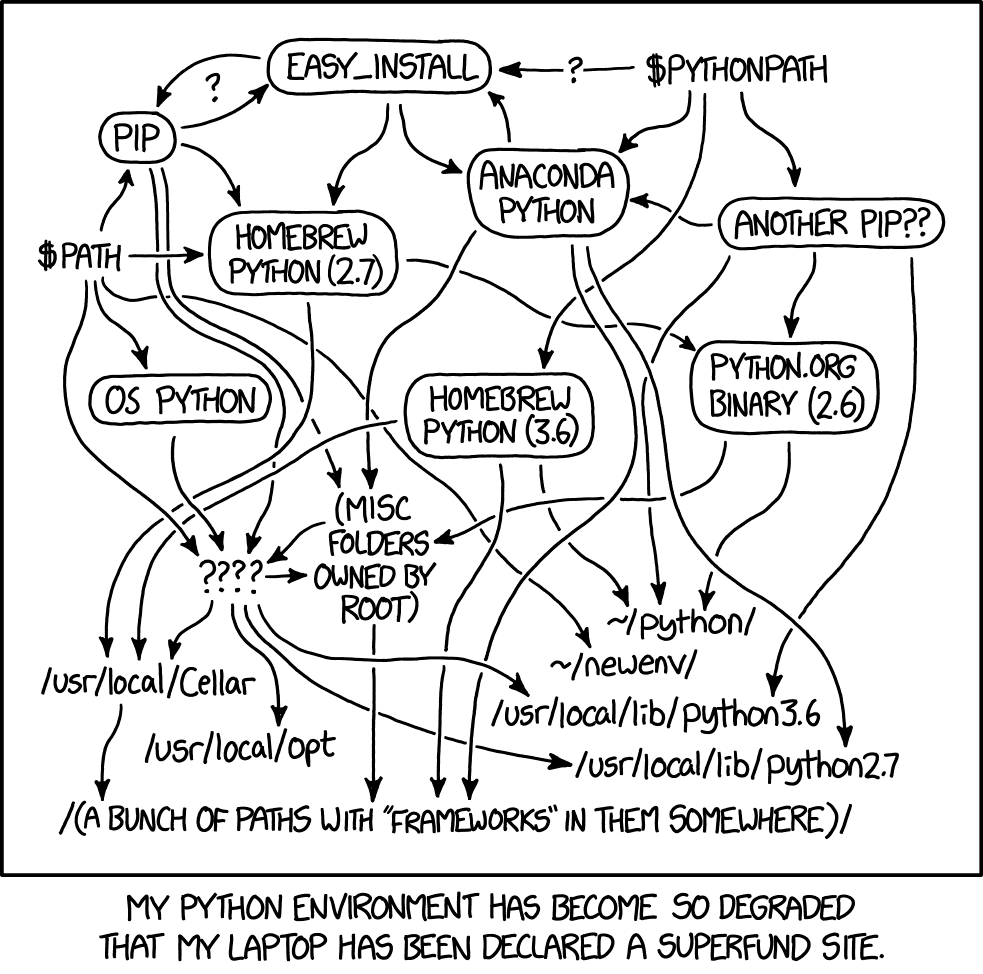

Feels very validating to see that everyone else’s Python is held together by a thread too.

Don’t forget poetry!

I started using poetry on a research project and was blown away at how good it is. Next week I start a new job and I am hoping it is the standard.

Now combine it with Nix and you’re on the happy path

I’ve recently discovered pipenv, and it has been a massive QoL improvement. No need to figure out a bazillion commands just to create or start an environment, or to work out which params to use like you do with venv. You just pipenv install -r requirements.txt, and everything is handled for you. And when you need to run it, just pipenv run python script.py and you are good to go.

The best thing, however, is the Pipfile, which can be distributed instead of requirements.txt, and I don’t get why it’s not more common. It’s basically requirements, but directly for pipenv, so you don’t need to install anything and just pipenv run from the same folder.

Yessssss

I actually wrote a script to make a folder an instant pipenv environment for me. Add it to your ~/.zshrc. It has saved me a ton of time. I just removed some spaghetti lines that would reinstall pip and shit, because it’s from when I was still in my early days of Py dev. Now I work more with Py than I do C#, and I’m a senior C# engineer; I just enjoy the masochism of py.

Also added a check for Arch/Ubu.

```bash
# Automated python virtual environment.
#######################################
VENV(){
    # Install pipenv first if it is not already on PATH.
    if ! [ -x "$(command -v pipenv)" ]; then
        echo "pipenv not installed... installing it now!"
        sudo pip install pipenv
        OS="$( ( lsb_release -ds || cat /etc/*release || uname -om ) 2>/dev/null | head -n1 )"
        if [[ "$OS" == *"buntu"* ]]; then
            sudo apt install pipenv -y
        elif [[ "$OS" == *"rch"* ]]; then
            sudo pacman -S pipenv
        fi
        pip install pipenv --upgrade
        echo "Installation complete!"
    fi

    # Use the version passed as $1, otherwise detect the installed one.
    if [ -n "$1" ]; then
        echo -e "Args detected, specifically using version $1 of python in this project!"
        version="$1"
    else
        version=$(python -V)
        version=$(echo "$version" | sed -e 's/Python //')
        if [ -z "$version" ]; then
            # Strip the "Python " prefix here too, so pipenv gets a bare version.
            version=$(python3 -V | sed -e 's/Python //')
            if [ -z "$version" ]; then
                echo "No python version installed... exiting."
                return
            fi
        fi
    fi

    echo -e "\n===========\nCreate a Python $version virtual environment in $PWD/.venv [y/n]?\n==========="
    read -r answer
    case $answer in
        [yY][eE][sS]|[yY])
            export PIPENV_VENV_IN_PROJECT=1
            pipenv --python "$version"
            pipenv install -r ./requirements.txt
            echo -e "\n\n\nVirtual python environment successfully created @ $PWD/.venv!\n"
            echo -e "To run commands from this dir use 'pipenv run python ./main.py'"
            echo -e "To enter a shell in this venv use 'pipenv shell'."
            echo -e "To install from a requirements text file use 'pipenv install -r requirements.txt'"
            echo -e "To update pip + all pip modules use 'pipenv update'!\n"
            echo -e "Additional information can be found @ https://pipenv-fork.readthedocs.io/en/latest/basics.html"
            ;;
        [nN][oO]|[nN])
            echo "Fine then weirdo why did you run the command then, jeez. Exiting"
            ;;
        *)
            echo "Invalid input..."
            ;;
    esac
}
```

I’ve been burned by `pipenv` before on a large project where it was taking upwards of 20 minutes to lock dependencies. I think these days they use `poetry` instead, but I’ve heard the performance is still not very scalable.

With that said, I think it can be a nice addition, but I think it comes down to Python packages not really treating dependency management as a top priority and favoring flexibility instead. This forces a package manager to download and execute the packages to get all the dependency information. Naturally, this is a time-consuming process if the number of packages is large.

On multiple instances I’ve seen projects abandon it for `pip` and a `requirements.txt` because it became unmanageable. It’s left a bad taste in my mouth. I don’t like solutions that claim to solve problems but introduce new ones.
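To make the metadata point a bit more concrete: once a package is installed, its declared dependencies can be read straight from its metadata without running any of its code. A rough sketch with the standard library’s `importlib.metadata`; `declared_requirements` is a hypothetical helper name, and this only covers the already-installed case. As far as I understand it, the slow part for a resolver is that with source distributions it may have to download and build each candidate before it can see the same information.

```python
from importlib import metadata


def declared_requirements(dist_name):
    """Return the requirement strings a distribution declares.

    Returns [] when it declares none, and None when the distribution
    is not installed at all.
    """
    try:
        # requires() reads the Requires-Dist fields from the installed
        # metadata; it returns None when there are no declared dependencies.
        reqs = metadata.requires(dist_name)
    except metadata.PackageNotFoundError:
        return None
    return list(reqs or [])


if __name__ == "__main__":
    # The package names below are only examples; any installed name works.
    for name in ("pip", "requests", "definitely-not-installed-xyz"):
        print(name, "->", declared_requirements(name))
```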

Poetry is nice for this but honestly, Rust’s cargo and the JS npm/yarn have spoilt me.

As a mac user I feel this

Original: https://xkcd.com/1987/

This is useless. Why don’t you start a community for google searches?

I didn’t start it.

I joined it, and linked it, because I liked getting my xkcd’s in my reddit feed from r/xkcd, because that meant there were comments to engage with. How the fuck is that useless? Why the animosity?

Like actually, how is the mere mention of a community enough for you to turn mean?

Edit: Wow. You’re an instance admin. Is this how you conduct yourself? Do you go deleting communities created by users on your instance, if you don’t personally see value in them?

Sorry to have been so mean. I simply think starving a website of visits is not great.

> Do you go deleting communities created by users on your instance, if you don’t personally see value in them?

That is one hell of a jump to conclusions.

Honestly, at this point I’m running all my python environments in different docker containers. Much easier to maintain.

Wait, you guys don’t have a vm for each project and just use ssh to work on them?

I wish I could do that, but my employer switches me around so much that I’d be out of disk space in no time.

How does the workflow work in practice? Do you just use the containers to compile your code, or do you actually have a whole dev environment with IDE and everything and work directly in the container? I can’t imagine what the workflow looks like. Or is it possible to set up e.g. JetBrains Rider to always spin up a container and compile the code in it? But then, if all the requirements and libraries are only in the container, how would it be able to do syntax highlighting and IntelliSense (or whatever the correct word for code completion is), if it doesn’t have the libraries on the host?

I’m probably missing something, but all the solutions I can figure out with my limited experience have issues: working in an IDE inside a VM sounds like a nightmare, with moving files between VM and host and the whole “spin up a VM, which takes time and usually runs slower on the shitty company laptop, just to make a quick edit in one project”. And setting up an IDE that runs on the host but uses an environment in a VM sounds like a lot of work with linking and mounting folders. But maybe the IDEs do support it and it’s actually easy and automated? If that’s the case, then I’ll definitely check it out!

Check out dev container in VSCode. Even better with Codespaces from Github. You can define the entire environment in code, including extensions, settings, and startup scripts along with a Docker container. Then it’s just one button click and 5 min wait until it’s built and running. Once you have built it you can start it up and suspend it in seconds, toss it out when you don’t need it, or spin up multiple at once and work on multiple branches simultaneously.

Thank god for NixOS. (My daily on my laptop, seriously flakes + `nix-direnv` is a godsend for productivity. Reliable development environments and I don’t have to lift a finger!)

Do you have any troubles running it as your daily OS? Do you use it as your hobby or also for your work?

I know Nix and use it as my package manager, but I’m not sure about the experience with NixOS. So I’m still reluctant to make the switch.

I just jumped in headfirst. I love it. It’s really just Nix, but with options to configure your whole system to your liking.

Stability’s been rock-solid and I haven’t yet encountered anything truly headache-inducing.

Here’s some starter advice:

- Try to start with flakes. Nix channels are known for being…unreliable at times.

- Start small, slowly extend. Many people’s Nix configs are often insanely abstracted and modularized. Personally, I started my flake config by installing KDE + Nix, and then linking the `configuration.nix` to the flake. (Remember, flakes just package the config, they’re not responsible for configuring the system).

My Nix config is relatively basic (check it out here), so feel free to look around and try to understand it. I’d also suggest using Home Manager if you aren’t already.

The NixOS forums are great for getting help. I’ll also point you towards the Catppuccin Discord server; the NixOS thread there is filled with many helpful people who helped me get started. (If you decide to swallow the Nix pill, feel free to join and ping me (my username’s Dukk); I’ll add you to the Nix thread).

Throw pyenv in there and add some more complexity!

Hopefully Mojo will sort it all out. Maybe even inspiring a new, positive streak of xkcd strips in the future?

Now take all of this and find something that needs an old out of date Python… Like 2.6.

… cry later

My workflow:

```bash
cd project
python -m venv .venv
. ./.venv/bin/activate
pip install -e .
```

By default `venv` excludes system packages, so I can have different versions in the venv. To reset the venv, I just have to delete the .venv dir.
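If you want to see what “excludes system packages” and “just delete the dir” amount to, the same workflow can be driven from Python’s standard `venv` module. A minimal sketch, assuming a throwaway temp directory; `make_venv` and `reset_venv` are hypothetical helper names, and `with_pip=False` is only there to keep the example fast and offline.

```python
import shutil
import tempfile
import venv
from pathlib import Path


def make_venv(env_dir):
    """Create a venv at env_dir and return the contents of its pyvenv.cfg."""
    # system_site_packages=False is already the default; it is spelled out
    # to show the setting that keeps system-wide packages out of the venv.
    venv.create(env_dir, system_site_packages=False, with_pip=False)
    return (Path(env_dir) / "pyvenv.cfg").read_text()


def reset_venv(env_dir):
    """Resetting a venv is just deleting its directory."""
    shutil.rmtree(env_dir)


if __name__ == "__main__":
    project = Path(tempfile.mkdtemp())
    env_dir = project / ".venv"
    # pyvenv.cfg records whether system site-packages are visible.
    print(make_venv(env_dir))
    reset_venv(env_dir)
    print("venv still exists:", env_dir.exists())
    shutil.rmtree(project)
```

The `include-system-site-packages` line in the printed `pyvenv.cfg` is the switch in question.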

I’ve been using pipenv for a good while but I’ve started to move over to venv slowly, and I like it so far. It’s a bit more of manual work but I feel like it’s worth it.

What did you not like about pipenv in comparison to venv? I was always avoiding venv because it was, as you said, manual work, and it was too much effort to google yet again what the order of commands and parameters was to start a venv. That’s not an issue in pipenv, since you just pipenv install what you need.

We had some issues in our CI where pipenv would sometimes fail to sync. It has recently gotten better, I think due to a fix of some race condition due to parallel installation. I think `venv` would be better suited for CI in general, since it allows the use of a simple `requirements.txt` file.

The other thing is I think it is rather slow, at least on windows which most of my team uses.

To conclude, I think as long as you aren’t having any trouble and it simplifies your environment, you might as well use it.

I only use python as a go-to scripting language when I need to quickly automate something or write a quick throwaway script that requires an SDK, since there’s a python library for almost anything and doing it in powershell would be too much additional work. But it does make sense that for CI you only need to figure out the venv setup once and you’re done, so it may be a better solution.